A/B testing is a method used to compare two versions of a product, service, or marketing campaign to determine which one performs better. It is a crucial tool for optimizing websites, applications, and digital marketing campaigns, and it is used by businesses of all sizes to increase conversions and make data-driven decisions.

A/B testing is frequently used in UX, where the differences being compared “could be almost anything from a small design detail to a radically new layout” (Pannafino 6). Beyond comparing designs, A/B testing lets us track users’ clicks, producing metrics that reveal pain points we didn’t know existed. VWO recommends following click paths with tools such as heatmaps, Google Analytics, and website surveys. The data gathered from A/B testing can also contribute to a higher return on investment (ROI). Forrester found that “on average, every dollar invested in UX brings $100 in return. That’s an ROI of 9,900%.” Identifying problem areas with A/B test data lets us target the exact problem while assessing multiple solutions.
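The 9,900% figure follows from the usual definition of ROI as net gain divided by cost. Assuming that definition (the quoted source does not spell out its formula), $1 invested and $100 returned works out as:

```latex
\text{ROI} = \frac{\text{return} - \text{investment}}{\text{investment}} \times 100\%
           = \frac{100 - 1}{1} \times 100\% = 9{,}900\%
```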

In an A/B test, users are randomly split into groups: one group sees one version of the product (Version A), while another sees a second version (Version B). The goal is to determine which version is more effective by measuring a specific metric, such as the click-through or conversion rate.
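Random assignment is what makes the comparison between A and B fair. One common way to implement it, sketched below under the assumption that every user has a stable ID, is to hash the user ID together with an experiment name and use the hash to pick a variant. This spreads users evenly across versions while ensuring each user sees the same version on every visit. The function name `assign_variant` and the experiment label `"homepage-test"` are hypothetical; commercial testing tools handle assignment in their own ways.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage-test",
                   split: float = 0.5) -> str:
    """Deterministically assign a user to "A" or "B" (hypothetical helper).

    Hashing the experiment name with the user ID spreads users
    pseudo-randomly across variants, while the same user always
    lands in the same variant on repeat visits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    # `split` is the share of users sent to Version A.
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # should be close to a 50/50 split
```

Because assignment depends only on the ID and the experiment name, no lookup table is needed, and changing the experiment name reshuffles users for the next test.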

Running an A/B Test

Running an A/B test typically involves the following steps:

- Define your hypothesis: Before starting an A/B test, clearly define what you want to test and what you hope to achieve. A hypothesis can be as simple as trying a new button color to see if it increases clicks or as complex as testing a new pricing model.

- Determine your metric: Decide on the metric you will use to measure the success of your test. Metrics could be anything from the number of clicks, conversions, or sales to time spent on the site or pages visited.

- Select your audience: Choose the group of users who will participate in the test. This sample could include all users or a segment of users based on demographics or behavior. Pannafino recommends getting the design in front of at least ten users, but the sample size you need will vary with your goal and available resources; detecting a small difference with statistical confidence usually takes far more users than that.

- Set up your test: Choose the tools you will use to run the test and set up the two versions of your product or service.

- Pilot the test: A pilot runs through the test as though it were the actual session. It is more than a practice run; according to Baxter, pilots help debug everything from the demo to the recording equipment that will collect quantitative and qualitative data.

- Run the test: Run the test long enough to gather sufficient data for a meaningful decision. Test length depends on the size of your audience and the test’s complexity, but running an A/B test for at least two to four weeks is generally recommended. Results can appear in as little as 48 hours, but UX Design Institute notes that this timeframe varies with sample size. If the test ends too early, the results may not reach statistical significance and may not adequately represent the population you’re trying to reach.

- Analyze the results: Once the test is complete, analyze the data to determine which version performed better based on your metric.
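The sizing, duration, and analysis steps above can be sketched in Python using only the standard library. This is a minimal sketch that assumes the metric is a conversion rate (each user either converts or doesn’t), a two-sided test at a 5% significance level, and 80% power. It uses a common normal-approximation formula for sample size and a pooled two-proportion z-test, which are one standard choice among several. The function names and every number in the usage example are hypothetical.

```python
import math
from statistics import NormalDist

# Hypothetical helpers: a sketch of one standard approach, not any tool's method.


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH variant to detect a change from the `baseline`
    conversion rate to the `expected` one (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    n = (z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2
    return math.ceil(n)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days of traffic needed to fill every variant, assuming an even split."""
    return math.ceil(n_per_variant * variants / daily_visitors)


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical plan: 5% baseline conversion, hoping to detect a lift to 6%.
    n = sample_size_per_variant(0.05, 0.06)
    print(f"Users per variant: {n}")
    print(f"Days at 1,000 visitors/day: {days_needed(n, 1_000)}")

    # Hypothetical results once the planned sample has been collected.
    z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=600, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("Significant at 5%" if p < 0.05 else "Not significant at 5%")
```

A test like this assumes the sample size is fixed in advance and the data are checked once at the end; repeatedly peeking and stopping as soon as p drops below 0.05 inflates the false-positive rate, which is one reason to commit to a run length before starting.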

A/B Testing Tools

A/B testing is more complex to run than some other UX research methods (such as 2×2 matrices or business origami), but several tools can help you run A/B tests.

- Google Optimize: A free tool Google offered for setting up and running A/B tests on websites. Google discontinued Optimize in September 2023.

- Optimizely: A popular paid tool that offers a range of A/B testing features, including the ability to test multiple variables simultaneously. Its visual editor lets you make site changes while viewing detailed analytics and results.

- VWO: Another paid tool with a range of A/B testing features, including heatmap tracking and real-time reporting. It lets you create and manage tests easily while providing analytics on user behavior and test results.

A/B Testing Usage & Results

A/B testing has been the subject of several research studies in recent years. It allows businesses to make informed decisions based on user behavior and data, and to see real-world results. Testing variables independently helps companies identify which changes make their website more user-friendly and effective for their goals.

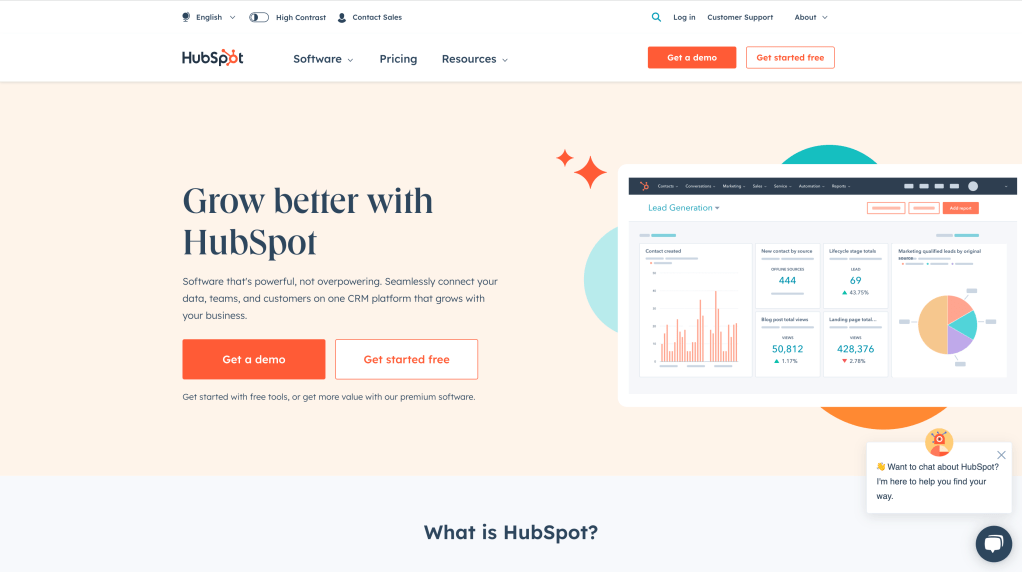

HubSpot, a developer and marketer of software products for marketing, sales, and customer service, used A/B testing to decide how best to let users search for a topic or term. Riserbato writes that the test used three variations. HubSpot found that all three options increased the conversion rate, but version C, which gave the search bar more visual prominence, used the placeholder text “search the blog,” and searched only the blog rather than the whole site, was the most effective.

Another example of successful A/B testing comes from WallMonkeys, a company that sells wall decals for homes and businesses. Crazy Egg first analyzed the landing page with a heatmap and found that most activity centered on the headline, call to action (CTA), logo, and search and navigation bar. With this information, they ran an A/B test that compared stock imagery with “a more whimsical alternative that would show visitors the opportunities they could enjoy with WallMonkey products.” They also tested a more prominent search bar to see whether users would search for specific items. The changes increased the conversion rate by 550%.
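Percentage increases like this are usually reported as relative lift: the change in conversion rate divided by the original rate. Assuming the 550% was computed that way (the source does not say), the new conversion rate $p_B$ is 6.5 times the original rate $p_A$; a hypothetical 1% baseline, for instance, would become 6.5%:

```latex
\text{lift} = \frac{p_B - p_A}{p_A} = 5.5
\quad\Longrightarrow\quad
p_B = (1 + 5.5)\,p_A = 6.5\,p_A
```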

Conclusion

A/B testing is a valuable tool for businesses of all sizes to get the most out of their products, services, and marketing campaigns. Comparing two versions of a product lets you make data-driven decisions that can raise conversions and ROI while reducing guesswork. Defining a clear hypothesis, metric, and audience up front will guide your A/B tests toward results that target the precise problem.

Sources

“AB Testing.” VWO, vwo.com/ab-testing/.

Baxter, Kathy, Catherine Courage, and Kelly Caine. Understanding Your Users: A Practical Guide to User Research Methods. Elsevier Science, 2015.

Carter, Chris. “Why More Companies Are Putting Big Money Into UX.” Adobe Blog, 8 May 2017, blog.adobe.com/en/publish/2017/05/08/why-more-companies-are-putting-big-money-into-ux.

“Benefits of A/B Testing.” UX Design Institute, 18 Sept. 2019, uxdesigninstitute.com/blog/benefits-of-a-b-testing.

“Understanding Statistical Significance.” Nielsen Norman Group, nngroup.com/articles/understanding-statistical-significance/.

“A/B Testing Experiments: Examples & Best Practices.” HubSpot, 7 Sept. 2020, blog.hubspot.com/marketing/a-b-testing-experiments-examples.